A few years ago, a friend described the rules of a game called Hex in a WhatsApp message, and asked me if I could prove that this game can never end in a draw. Strange question if you aren’t into maths - but for some reason this type of challenge seems to pique the imagination of the mathematically-inclined in a way which can sometimes be hard to explain. I’d like to show here the standard solution to this problem, and hopefully get across a sense of why questions like this can be so compelling.

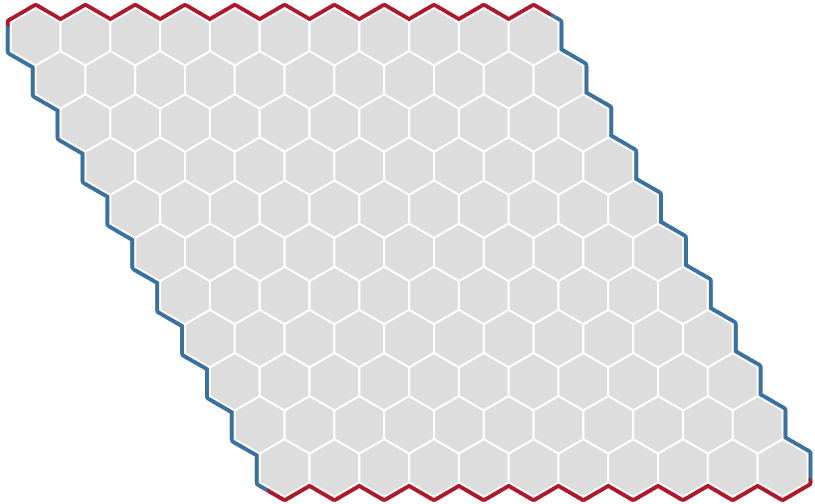

Hex is played on a board that looks like this:

The size of the board varies, but 11x11 is most usual. Two players, red and blue, take turns placing counters of their colour onto unoccupied spaces (the hexagons) in the grid. Once placed, the counters never move. The winner is simply the first person to create an unbroken chain of counters connecting their two sides. For example, a game won by red might look like:

To get a feel for the game before proceeding, try having a few games against a computer opponent or a friend here. I also created a more visually appealing board you can use to play with another human on Observable1 here - there’s no AI to play against, but this is a cool project for the future!

The first point to note, which seems intuitively obvious, is that presumably one can never create an arrangement of red and blue counters such that both colours have a winning chain across the board (even if we relax the assumption that the game ends as soon as one of the players achieves a winning chain, thereby giving the other player time to finish their own). This is because a winning red chain (as in the diagram above) would have to intersect any also-winning blue chain traversing the board left-to-right.

The Hex Theorem

So far so straightforward. The real goal here is to prove that the game can never end in a draw - a result known as the Hex Theorem. This seems a fair bit harder - such a scenario would arise if the two players kept placing counters on the board until the whole grid was filled up, with neither player ever succeeding in creating a winning chain. So the question reduces to: can you prove that if a Hex grid is filled entirely with red and blue counters, there absolutely MUST be a winning chain somewhere on the grid?

(Aside: note that this is actually a marginally stronger statement than the original, because it would prove that any arrangement of counters at all must contain a winning chain somewhere on the grid. In a real game of Hex, only a small subset of all arrangements can actually arise, because the players take it in turns and therefore the game ends with an equal number of red and blue counters. Nevertheless, if we prove the more general case, we’ve still proven the original Hex Theorem).

We’ve now reached the point which, to me, explains the unreasonable curiosity-captivating quality of seemingly mundane and pointless questions like this. We’ve basically been asked to prove that, within the confines of a simple set of rules, a particular occurrence (a winning chain) absolutely MUST happen regardless of the near infinitude of leeway afforded to the players in their choices of moves at each step.

Pursuing that idea a little further, it’s actually quite easy to write down an upper-bound for2 the total number of possible Hex games for a given board of side length . Such a board has unoccupied spaces at the beginning of the game, and the first player can choose any one of them. After that, the second player can choose from the remaining unoccupied spaces, and so on. To get the total number of possible games we simply multiply together these values until the board is filled. This gives (-squared factorial). This number is approximately for an 11x11 grid. It’s hard to describe how vast that number is, but for some semblance of context, there are thought to be approximately atoms in the observable universe. So the number of games of Hex is (quite considerably) more than the square of the number of atoms in the observable universe. In other words if you replicated our universe once for each atom in the universe, the total number of atoms in that “squared” universe would still be far, far less than the possible number of Hex games.

Of course, if we had infinite time, patience and scratch paper, we could make an attempt to enumerate every single possible case (it’s a finite number, after all, even if stupendously large). Then, once we had written down every possible case, we could proceed to identify the winning chain for every single diagram, and verify that there is indeed a winning chain in every case.

The problems with such an approach:

-

It takes ages, and there are not enough atoms in the universe to write everything down

-

It can only be accomplished for a fixed board size, or a finite selection of board sizes. I want you to prove that this result holds no matter what size the board is

-

It teaches us nothing deeper about why the result is true.

In the end, we’ll be able to give a neat and somewhat ingenious string of logical steps to show that, no matter how hard you try, you won’t be able to find an arrangement of blue and red counters filling up the grid which doesn’t somewhere contain a winning chain for one of the two sides. I think my sense of awe at the power and beauty of mathematics frequently comes down to something analogous to this: instead of enumerating an infinitude of possibilities to understand a phenomenon, we are frequently able to find a clever chain of reasoning which proves beyond any doubt at all that the statement of interest is always true, even when that statements is infinite in scope. We surmount seemingly impossible odds through the application of ingenuity.

To start the proof, let’s now take a completely filled (at random) Hex grid:

The first step will be to invent a construction on top of this grid which will be well-defined and regarding which we’ll manage to prove some interesting but fairly straightforward properties. The construction involves drawing a line along the white edges between the counters according to the following rules:

-

Start at a corner (where both red and blue meet)

-

Draw the line so that it always passes between differently coloured counters (when we’re near the edge of the board, I’m treating the coloured edge as a ‘counter’ - you could also just imagine that the game is played with an extra row of counters already assigned to red along two of the sides and blue along the other two sides)

I’ve started filling in this line in black below:

The astute reader might immediately object: “but what if I can’t always do that? How do you know the line won’t eventually reach a place somewhere in the grid where there’s nowhere else to go that satisfies the ‘pass between different colours’-rule? Or equally, what if the line arrives at a point where it has a choice of two different directions? Where does it go then?”

These questions get to the heart of a notion frequently encountered in mathematics known as “well-definedness”. One can easily postulate the existence of entities like the line I told you to draw simply by providing a rule for its construction, but this rule in no way guarantees that such a thing must always exist or be uniquely specified by the rule. We have to demonstrate those things explicitly.

First, we can show that any path drawn according to this rule would never self-intersect or loop back on itself.

To see this, consider the following diagram.

Here we depict a general ‘vertex’ from somewhere along the path. A vertex is really just a point where three of the grid’s hexagons meet. We know that the path must approach the vertex along a line with red on one side and blue on the other side (this is the defining feature of the line’s construction). Now the third hexagon could be of either colour, but it must, of course, be one of the two. Whichever it is, the path will continue in exactly one of the two available directions. In the diagram above, for example, if the ‘?’ is a red hexagon, then the line must continue to the left. If it’s blue, the line must continue to the right. Whichever of these turns out to be the case, the third so-far-untraversed line will pass between two counters of the same colour. And for this reason, it can never form a future part of the path (even much later on in the journey) because that would violate the construction rule of the path. Therefore, the path never loops or self-intersects. There is also never an ambiguity about where to go next.

Notice also that this same argument provides a clear demonstration that the path could never terminate somewhere in the middle of the grid (where the vertices always have three lines emanating from them). In fact, this means that the path can only possibly terminate at a corner, where the vertices have only two lines emanating. You can see this in action on the diagram where I drew the start of the path.

The next observation we can make about these paths is that, running the entire length of the path, the counters are the same colour on both sides. For example, in the diagram above, if you were an ant following along the path you would always have a red counter on your left and a blue counter on your right. It should be fairly clear from the construction rule of the path that this must always be the case. The zoomed-in diagram of the three counters around one vertex should also help you to see that this is so.

One final observation before we consider a full-blown path in our particular case: we know that the path starts and ends at two corners (but not the same corner, because there are no loops). We also know that the colours of counters remain the same along the full lengths of each side of the path. So consider: can the path start and end at diagonally-opposite corners? If you go back and inspect the diagram, you should notice that this can’t actually happen because the colours would have to be reversed on the final segment, contradicting what we know about the colours staying the same along the full length. This contradiction shows us that the path must start and end at adjacent corners.

So to summarise, we’ve concluded the following:

- The path is unique and well-defined

- It does not loop or self-intersect

- The path has red counters along the full length of one side and blue counters the other side

- It must start and end at the corners of the grid, and moreover, they must be adjacent corners, not diagonally-opposite.

With all this in mind, I’ve filled in the entire length of the path for this particular arrangement of red and blue counters. (Note that doing so is just to assist our visualisation and thinking about the properties of these paths in general. Any particular feature of this exact path could be idiosyncratic to the specific random arrangement we used to generate it, which is why we were careful to provide watertight arguments derived only from the construction rule itself, before even looking at this diagram for confirmation).

You’ll notice that this particular path satisfies all our deductions - no looping or intersecting, starts and ends at adjacent corners, same-coloured counters along the full length of both sides of the path.

Looking at this picture, we should now see how the final step in the proof goes. We’ve constructed a line through the grid which connects adjacent corners (in this case top-left and bottom-left). This means that our path is forming an unbroken barrier between the two blue sides of the grid (in this case). But since we also know that that very line has red counters running along the entire length of one side of it, we have identified an unbroken chain of red counters which traverse the grid in the other direction (i.e. the red direction). And of course, these observations would hold for any general path constructed in this way for any given grid. Which proves what we wanted - there is always a winning chain somewhere (indeed, we were able to provide an algorithmic way to actually find it on the board). QED.

Brouwer’s Fixed Point Theorem

For me, this proof is already satisfying enough - I tried to express why it’s so appealing to tackle and solve a problem which has such a simple statement (a small child can probably understand the question) but yields such a mind-bendingly complex set of possibilities (a small child would be something of a prodigy to dream up a proof like this from scratch). Hopefully, if you’re not already into maths, this sketch of what mathematical proofs often look like has given you some kind of taste for what draws people past high school maths and into higher studies of the subject. At that level, it becomes more about creatively seeking beautiful logical reasoning than merely learning to apply rote recipes like long division or integration.

But - there’s more. It actually turns out that the proof sketched out above has a deep connection to a result in mathematics called Brouwer’s Fixed Point Theorem. In fact, it’s possible to demonstrate that the above result is precisely equivalent to the two-dimensional version of this important result in topology. In brief, the Brouwer Fixed Point Theorem states that a continuous function3 from a set to itself must have a fixed-point - that is, some input to the function does not get transformed at all by the function4.

Here are a couple of surprising consequences of this possibly arcane-sounding theorem:

-

If you stir a cup of coffee, there is a molecule of coffee at the end which is in the same place it started. This is because the coffee continues to occupy the same physical space (which is our input and output set), and the operation of stirring coffee is a continuous function, which takes elements from the input set (i.e. locations in the cup) back to other elements of the same set (locations in the same cup). Brouwer’s Fixed Point Theorem immediately yields the result: there exists a fixed point of this “function”, meaning that some input element got mapped to the same place it started. So somewhere in the cup, a molecule of coffee wound up exactly where it began. This is completely inescapable, as long as the operation remains continuous, and all the coffee stays in the cup.

-

If you crumple up a piece of paper, and lay it over the top of an identical piece of flat paper, there is a point in the crumpled sheet which is precisely above its “partner” in the uncrumpled sheet. Again, this is because the act of crumpling up a sheet of paper (as long as you don’t tear it) is a continuous mapping of the points. So if you lay the crumpled sheet over the top of the flat one (and ensure there is no overhang outside the edges) then you must have a “fixed point” which has not moved in the operation of crumpling.

-

If you walk up a path on a mountain, starting at noon on Monday and reaching the summit at midnight, and then you walk back down the following day, setting off at noon on Tuesday and arriving at midnight, there is a moment on Tuesday where you are at the exact same spot you were at the same time on Monday. Note that this says nothing about your approach to walking up and down. You do not need to walk at a consistent pace. You could race up the mountain on Monday and then take a meandering pace on Tuesday with the occasional sprint followed by a long break where you admire the view. You could even retrace your footsteps back to the summit to collect your forgotten raincoat, before resuming the descent once again. Any way you slice it, the statement always holds. You can try to prove this one directly for yourself - start by drawing a diagram with time (from 12 noon to 12 midnight) on the x-axis, and altitude up the mountain on the y-axis. Then superimpose your meanderings up and down the mountain from both days onto the same chart. Once you’ve done that, it should be fairly clear how to prove the statement.

In summary, Brouwer’s Fixed Point Theorem gives us a pretty strong existence statement that applies to many important functions that arise in areas across maths and the sciences (not just the whimsical ones suggested above!) Once mathematicians prove a statement like this, it’s simply guaranteed to be true forever and we can immediately whip this fact out whenever we have a function which meets the criteria - which is actually not at all uncommon. This can help give us an important step for free in our efforts to prove other results. For example, John Nash (who incidentally created Hex), used the Brouwer Fixed Point Theorem in some of his important contributions to game theory.

It’s finally worth mentioning that to the mathematically-inclined, these sorts of “existence theorems” are just pretty cool. You get to immediately rely on the existence of a very interesting and unique property of a function, without having to identify where it is - you just need to know that the function satisfies some basic prerequisites.

A Final Hex Result: Strategy Stealing…

One of my favourite hobbies is chess. The game is sufficiently complex that it’s not known whether white or black would win the game (or, more likely, if it would be a draw) assuming perfect play from both sides. But that question does have an answer, and to find the answer would be to “solve chess”. In the game of Tic-Tac-Toe (Naughts and Crosses), the rules and possibilities are simple enough that one can fairly easily show that the game is a forced draw if both sides play properly.

It turns out that, although Hex has far more combinatorial explosion than Tic-Tac-Toe (as described earlier), there is another ingenious argument which lets us see that this game is actually a forced win for the first player. The argument relies on a clever idea called strategy stealing. It goes roughly like this:

-

We have already discovered that it is impossible for the game to end in a draw. Therefore either the first or second player must win.

-

Hex is a perfect information (i.e. there is no room for chance - given a position, you already know everything there is to know about it and you can, in principle, find out what the maximally favourable move is from that information). So there must be a winning strategy for either the first or second player.

-

Let us assume that the second player has a winning strategy.

-

The first player can now adopt the following approach. He makes an arbitrary move. Thereafter he plays the winning second player strategy assumed above, ignoring his own first counter. If in playing this strategy, he is required to play on the cell where an arbitrary move was made, he makes another arbitrary move. In this way he plays the winning strategy with one extra piece always on the board.

-

This extra piece cannot interfere with the first player's imitation of the winning strategy, for an extra piece is always an asset and never a handicap in the game of Hex. Therefore, the first player can win.

-

Because we have now contradicted our assumption that there is a winning strategy for the second player, we are forced to drop this assumption.

-

Consequently, there must be a winning strategy for the first player.

Another cool existence proof! We know the first player can win, but we don’t actually have the details for how to work out the right move in a given position. I think there’s something fascinating about that.

Next Steps

I haven’t been able to find a nice place to play Hex online (the link I provided at the top is pretty old and not very visually appealing!), so in the future it would be fun to set up a page with a Hex game based on the code I wrote in the Observable notebook.

Once that exists, it would be cool to experiment with creating a Hex-playing AI, so you aren’t restricted to just playing with other humans. I've written a basic chess-playing algorithm in the past, and this would be very similar (since Hex is another turn-based perfect-information game, like chess). The general approach involves traversing a subset of the game tree, but falling back on some heuristics to evaluate later positions since we can’t explore the whole thing (due to combinatorial explosion). Since the rules of the game are so simple, especially compared to chess, this project would probably be fairly straightforward.

Further Resources

-

This video by Mathologer5 demonstrates a proof of Sperner’s Lemma, another result very closely associated to the Brouwer Fixed Point Theorem. When my friend first sent me the Hex Theorem problem, my solution was a reworking of the technique shown in this video. I looked at the dual graph of the Hex grid (which is a triangulation like in the video) and used the same “doors” trick to construct the path we found in the main part of this article.

-

This paper gives a more formal version of the Hex Theorem proof, as well as a proof of its equivalence with the Brouwer Fixed Point Theorem.

- Observable is an awesome site which allows you to create Javascript notebooks in your browser, as well as sharing and collaborating on them with others. It was created by Mike Bostock who also created D3.js, a Javascript library for creating beautiful data visualisations.↩

- The argument given here is only an upper bound for actual games of Hex because real games end before the entire board has filled up. If we re-run the same maths, but assuming that the average game ends when about half the board has been filled up, we get that there are c. 10^80 possible games - which is the size of the universe in atoms.↩

- A function is a way of specifying how one quantity relates to another. For example, if you threw a ball into the air, you could describe its height above the ground as being “a function of time”, because at any given time (which is the input quantity) there is an associated height (the output quantity). A continuous function is something like what you get when you draw a line on a piece of paper without lifting the pen. There are no abrupt changes (discontinuities). Indeed, the example of throwing a ball into the air is an example of a continuous function, as are most physical processes in the real world.↩

- There are a few more conditions on the real theorem than I’ve given here. See the Wikipedia article for more detail.↩

- Mathologer is a really cool YouTube channel by Burkard Polster, a math professor at Monash University. He shows some great visual derivations of beautiful mathematical results for a general audience.↩